How do you make a computer within a computer game?

Hello once again

So another month has passed, and I've noticed I have been doing a ton of menus: the Title Menu, the Main Menu, the Settings Menu, the Credits Menu, the Pause Menu, and the Settings Menu in the Pause Menu. That last one is just a copy of the Settings Menu from the Main Menu, but you still need to make sure everything is hooked up correctly, or else things like your mouse sensitivity will cause errors on the menu or not update in the game. On top of that there's a whole system to link them all together, and it's still not done! Games have a lot more menus than most people realize, and those menus have a lot going into them to make them work as nicely as they do. Hopeless Forager is no different, and that's especially true when it comes to The Terminal!

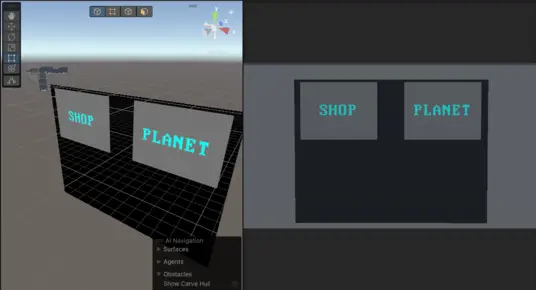

The Terminal is going to be the in-game computer that the player can use to control and buy stuff. As such, it needs more menus to help the player achieve those goals. However, the Terminal is very special. The previous menus could just use Unity's built-in Graphic Raycaster and UI elements to give me user interactivity. The Terminal, on the other hand, is a Render Texture showing what a World Space Camera sees, and that camera is far away from the player. This is a problem because, much like an actual screen, you can't actually interact with the UI that is displayed on it. That of course means any menus displayed on the Terminal are essentially inaccessible to the player, unless there are some hoops they can jump through to raycast off into the void and click the UI, but that is the definition of bad game design.

So, to break the problem down to its simplest form: we are displaying a button somewhere the button, and very importantly its hitbox, isn't. The solution needs to bridge the gap between where we expect the raycast to land and where the button's hitbox actually is. The easiest option is to simply move the hitbox to where the raycast should land, and this is a perfectly good solution. If you lock the player's perspective, they'd never be able to tell. However, it loses some of the customizability that comes with turning a canvas into an actual screen. So I took the second approach.

In the second approach, when the Render Texture is hit by a raycast, that hit gets turned into a fake raycast on the Canvas. Thankfully, Unity has plenty of stuff to help with that endeavor: it can create events, determine exactly where someone clicked on a texture, and link two objects together via serialization. Assuming your camera is lined up correctly and the texture is on an object, it's possible to get a normalized position of where the mouse clicked on the texture. From there the object can fire an event over on the canvas and hand it the normalized position. Multiplying that position by the width and height of the camera's view gets you exactly where you would have clicked on the UI if it were there. From there all that's left is to inject a new Pointer Event Data, share some data with the UI elements, and then boom, it's as if the screen really were the canvas.
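To make the math in that step a bit more concrete, here is a minimal, engine-agnostic sketch in Python. It is not the Unity code from the game. The `Button` class, `uv_to_canvas`, and `hit_test` are hypothetical names made up for illustration, and the sketch assumes the normalized position has its origin in the bottom-left corner and that the canvas is measured in the same pixels as the camera's view. In Unity the normalized position would most likely come from something like `RaycastHit.textureCoord`, and the actual hit testing would be left to the canvas's own raycaster rather than written by hand.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Button:
    """Hypothetical stand-in for a UI element: an axis-aligned rectangle in canvas pixels."""
    name: str
    x: float       # left edge
    y: float       # bottom edge
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)


def uv_to_canvas(u: float, v: float,
                 canvas_width: float, canvas_height: float) -> Tuple[float, float]:
    """Scale a normalized (0..1) hit position up to canvas pixel coordinates."""
    return (u * canvas_width, v * canvas_height)


def hit_test(buttons: List[Button], u: float, v: float,
             canvas_width: float, canvas_height: float) -> Optional[Button]:
    """Return the topmost button under the normalized hit position, if any."""
    px, py = uv_to_canvas(u, v, canvas_width, canvas_height)
    # Assumption: later entries are drawn on top, so check them first.
    for button in reversed(buttons):
        if button.contains(px, py):
            return button
    return None


if __name__ == "__main__":
    # An 800x600 terminal screen with one made-up button.
    terminal_buttons = [Button("Buy", x=100, y=50, width=200, height=80)]
    clicked = hit_test(terminal_buttons, 0.25, 0.125, 800, 600)
    print(clicked.name if clicked else "nothing hit")
```

The point of the sketch is just that the screen in the world only has to report a position between 0 and 1 on each axis. Everything about sizes and layouts stays on the canvas side, which is what keeps the screen free to be any size or shape.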

This is an oversimplification, however, so if you'd like to learn more or see someone code it out for yourself, here is the tutorial I used to get this effect: Unity Tutorial: Creating interactive in-game screens by Iain McManus

Until next time!

Hopeless Forager

Can you survive the next ejection?

| Field | Details |
| --- | --- |
| Status | In development |
| Authors | arcane-ground games, HavocZhou, Lusenio Incidium |
| Genre | Survival |
| Tags | Action-Adventure, Horror, Singleplayer, Space |
